Encoding Architectural Knowledge

New Research from Biomedical Sciences Points to a More AI-Savvy Way to Organize Architectural Knowledge

In This Post:

Sock Bankruptcy

Architecture Firms Clean House, Kinda

A Tradition of Strategic Incompleteness

A Project to End All Projects

A New Method for Detecting Knowledge Deficiencies

Structural Entropy > Structural Incompleteness

Train on Inputs, Not Outputs

Visualizing Project Knowledge

How Does a Robot Draw a Wall Section?

Encoding Architectural Knowledge – A Way Forward

Sock Bankruptcy

Unbeknownst to anyone, I declare "sock bankruptcy" every couple of years. As happens with everyone, my socks come in pairs, and end up as singlets. I’ve always assumed that there’s some kind of sock gremlin that lives in my dryer. I always somehow end up with a bunch of mismatched pairs and singlets that are a regular chore to put together. So, when the Great Sock Disorder reaches critical mass, I throw all my socks away and buy a big 12 pack of socks from [Corporate Behemoth], and start fresh with 24 new, identical socks.

Why not just buy the new socks and keep the old ones? Because that ameliorates the problem in the near term, and amplifies it in the long term. If I do that, I have 24 socks that definitely match, and a legacy collection of 20-30 singlets and mismatches. After a while, though, according to the laws of Sock Chaos Theory, I have 35-45 singlets and mismatched pairs. Sock bankruptcy is my small rebellion against chaos, my Sisyphean attempt to impose order on a universe that laughs at all human plans. Whatever horrors the day might bring, at least I know I have my sock situation sorted.

The point is, sometimes it's easier to throw out the legacy system and start fresh. It's a problem that a lot of us are facing, with the rise of Gen AI. Is it better to reverse-engineer our old socks, so that they work with this new AI thing? Or just buy new ones? The old socks certainly have some value – plenty of them are still good socks, and some are even still matching pairs. But adding new, clean content to a problematic system doesn’t always correct the system – it often just makes for a larger, more problematic system.

Architecture Firms Clean House, Kinda

Architecture firms currently face this existential sock drawer moment with their legacy project files. A few firms I know are increasingly convinced that their path to AI mastery runs through all these terabytes of old BIM & CAD files. That seems logical enough: if we want AI to help us design buildings, shouldn't we train it on all the buildings we've already designed?

Seems reasonable. These files represent real projects, real clients, real budgets, and real constraints. They express the firm's design DNA and aggregated knowledge. For any firm, training an AI in such a manner would theoretically produce an AI that designs like, well, the firm itself (for better or for worse).

But this approach faces a fundamental challenge that's deeply embedded in how architects actually work: strategic incompleteness.

A Tradition of Strategic Incompleteness

Over the course of architecture school, I gradually learned to complete only the parts of a studio project that served the ultimate objective: making the jury swoon, gasp, or at minimum, not fall asleep during my Final Review. Out of necessity, I developed the discipline to not fill in every detail. If it's something that won't be seen, understood, or consequential to the final review, you leave it out. When making a Rhino model, I’d decide the positions and angles of my intended final renderings, and then only really develop the model where it would be visible from those vantage points. Some model elements I wouldn't even develop at all, opting instead to do them later in post-production (Photoshop & Illustrator). My seemingly complete, utterly magnificent final boards could be 'complete' while actually consisting of artfully arranged half-developed parts.

As a professional, you're forced into something similar. Any artifacts of the design process can be in varying states of incompleteness, so long as the permit set is complete. That’s what ultimately becomes part of the contract. So why over-detail parts of the BIM model that won't ever make it to the permit set?

But then again, the permit set isn't the ‘complete’ project, either. It only has to be complete by the standards of a permit set. It doesn't contain all the knowledge of the project. It doesn't contain renderings, or meeting minutes, or . . . It just needs to have specific information, in specific form, necessary for the contractor to build the design, and for the authorities to approve it.

The many artifacts of an architect's work are all strategically incomplete, by design, pun very much intended. Not because architects are lazy or incompetent, but because total completeness would be inefficient, expensive, and ultimately unnecessary.

The challenge today is that training an AI on your past projects involves a lot of disparate data types (BIM models, CAD files, paper sets, working drawings, notes, etc.), and almost all of them are strategically incomplete. Somewhere, in their sum, is the 'whole project', and that's what the AI should be learning from.

A Project to End All Projects

All those incomplete BIM models and CAD files present a significant speed bump on the road to AI adoption, because machines need consistent, regularized, structured data to learn from. Getting old projects ready for machine learning (ML) represents a lot of work. Like, a lot a lot.

Consider a 40-year-old firm that does, on average, 25 projects per year. Founded in 1985, they worked on paper until 1996, when they switched to CAD. They then did CAD projects until 2008 when they switched to BIM. This firm decides to ‘clean up’ all of their legacy project files in order to prepare them for future ML efforts.

Assuming conservative estimates for cleanup time (two weeks, or 80 hours, to prepare a BIM project for ML; a month, or 170 hours, for a CAD project; and two months, or 340 hours, for a paper project), the total effort required is staggering:

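17 years of BIM (2008 through 2024) x (25 projects/year) x (80 hrs) = 34,000 hrs

12 years of CAD (1996 through 2007) x (25 projects/year) x (170 hrs) = 51,000 hrs

11 years of paper (1985 through 1995) x (25 projects/year) x (340 hrs) = 93,500 hrs

= 178,500 total hours, or about 87.5 years of full-time work for one person.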
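As a quick check on that arithmetic, here is a small sketch. It assumes each era is counted inclusively by calendar year (2008 through 2024, 1996 through 2007, 1985 through 1995), which is the reading that reproduces the 178,500-hour total, and a 2,040-hour working year (51 weeks of 40 hours). Both of those are my assumptions, as are the variable names.

```python
# Rough cleanup estimate for the hypothetical 40-year-old firm.
PROJECTS_PER_YEAR = 25
HOURS_PER_WORK_YEAR = 2040  # assumption: 51 weeks x 40 hours

# (era, first year, last year, cleanup hours per project); years counted inclusively
ERAS = [
    ("BIM", 2008, 2024, 80),     # two weeks per project
    ("CAD", 1996, 2007, 170),    # about a month per project
    ("paper", 1985, 1995, 340),  # about two months per project
]

def cleanup_hours(first_year, last_year, hours_per_project):
    """Total cleanup hours for one era, counting both end years."""
    years = last_year - first_year + 1
    return years * PROJECTS_PER_YEAR * hours_per_project

hours_by_era = {name: cleanup_hours(a, b, h) for name, a, b, h in ERAS}
total_hours = sum(hours_by_era.values())
person_years = total_hours / HOURS_PER_WORK_YEAR

for name, hours in hours_by_era.items():
    print(f"{name:>5}: {hours:,} hours")
print(f"total: {total_hours:,} hours, about {person_years:.1f} person-years")
```

Changing the per-project hours or the project count is a one-line edit, which makes it easy to see how sensitive the headline number is to those guesses.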

That's not a typo. Nearly nine decades of full-time work to retrofit legacy projects for AI training — that's longer than some architectural styles remain fashionable, certainly longer than any firm has before artificial general intelligence renders the whole exercise moot. So how should firms actually approach training their AIs?

A New Method for Detecting Knowledge Deficiencies

A recent paper got me thinking about a completely different approach. "Structural Entropy Guided Agent for Detecting and Repairing Knowledge Deficiencies in LLMs" details a new system called “SENATOR” that addresses exactly this problem of incomplete training data, in this case for medical AIs. It involves knowledge graphs and Monte Carlo Tree Search and the sort of nerdy shit that I obsess about when I can’t sleep at 2 AM, which is when I write most of these posts.

If you recall from my last post (Convergent Intelligence in Architecture), medical AI models perform worse on global health questions than they do on 'standard' health questions because most of their training data comes from Western sources. Two patients, with the exact same symptoms, may require completely different treatments, if one is from Tokyo and the other from rural Chad. But incomplete or context-specific gaps in training data make AI default to whatever is considered the 'norm' within the training data context. If the AI is trained on data from Japan, and not Chad, the Chadian patient may end up with some fairly misaligned medical advice. Doctors need context to accurately diagnose and treat a patient.

Doctors have a powerful ally in assessing context: medicine’s ‘database of databases’, the Scalable Precision Medicine Open Knowledge Engine (SPOKE). SPOKE is an enormous body of medical data, organized as a knowledge graph. For those new to knowledge graphs, they're simply visual maps of how different pieces of information connect to each other. Think of them as relationship diagrams, but for data. They're composed of nodes (representing things like people, places, concepts) and edges (representing relationships between those things), and they look like this:

SPOKE, though, has 27 million nodes of 21 different types and 53 million edges of 55 types downloaded from 41 databases. For all its vastness, SPOKE suffers the same problem that bedevils all bodies of information: it’s got gaps.

Enter SENATOR, the digital gap-filler. Imagine an ant (a very intelligent, data-savvy ant with a PhD in computer science) crawling along those millions of knowledge pathways, continuously testing the structural stability of each node and edge it encounters. When it detects instability (a missing connection, an anomalous data point, a knowledge gap), it analyzes contextual clues from surrounding information to predict what should fill that gap. It then generates synthetic data to plug the hole, creating a more complete knowledge tapestry.

Human researchers review these synthetic patches to ensure accuracy, creating a human-AI partnership that gradually makes the knowledge base more complete, more reliable, and more contextually aware.

Structural Entropy > Structural Incompleteness

How does our ant determine where a knowledge gap might be? Through something called structural entropy - a way of measuring how much uncertainty exists in the connections between pieces of knowledge. Everything in medicine (or a design process) is connected to multiple other things... the connections could be causal, temporal, categorical, relational, etc.

Following various paths through the system (purple and red), we develop expectations about what should exist at certain intersections. When multiple paths lead to the same conclusion, that strengthens our confidence that the information at that junction is correct. If information is missing where multiple paths converge, we can make educated guesses about what should be there, based on the surrounding context, as with words crossing on a Scrabble board:

Your brain has no problem filling in the missing letters, because you know how Scrabble works, and how language works. Filling in any one of the missing letters gives you greater confidence in guessing what the others are. A conflict, where a letter that fits one word breaks the word crossing it, suggests instability in the system (your previous word choices).
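To make "structural entropy" slightly more concrete, here is a minimal sketch of one standard formulation, the one-dimensional structural entropy of an undirected graph, which depends only on node degrees: H = -sum over nodes of (d_i / 2m) * log2(d_i / 2m). Whether SENATOR uses exactly this form or a higher-dimensional variant is not something this post establishes, and the toy graphs and names below are made up for illustration.

```python
import math
from collections import defaultdict

def structural_entropy_1d(edges):
    """One-dimensional structural entropy of an undirected graph.

    H = -sum_i (d_i / 2m) * log2(d_i / 2m), where d_i is the degree of
    node i and m is the number of edges.
    """
    degree = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    total_degree = sum(degree.values())  # equals 2m
    return -sum((d / total_degree) * math.log2(d / total_degree)
                for d in degree.values())

# Hypothetical toy graph: four facts connected in a ring.
ring = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A")]
# Same four facts, but everything hangs off one hub node.
star = [("A", "B"), ("A", "C"), ("A", "D")]

print(structural_entropy_1d(ring))
print(structural_entropy_1d(star))
```

The ring, where connections are spread evenly, scores higher than the star, where one hub dominates. That number is only a summary of how connection is distributed across the graph; locating a specific gap takes the kind of path-following described above.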

Train on Inputs, Not Outputs

Architecture doesn't have a knowledge graph equivalent to SPOKE. But it should. Architectural data, of course, is in many cases much more subjective, which would seem to preclude building a knowledge graph at the scale of the profession. But it wouldn’t preclude doing so at the scale of the firm.

The underlying principle (using a body of incomplete, structured knowledge to guide synthetic data generation, which then fills in the knowledge gaps of the dataset) speaks directly to the challenge currently facing firms as they prepare for AI. Not only does it shortcut the whole 88-year legacy project cleanup problem (and who has 88 years these days? Not me), but it lays a path towards a true artificial architectural intelligence. Training an AI on BIM models, or CAD files, just won’t work.

As I laid out in my last post, a drawing (or a BIM model) doesn't speak to the intelligence that gave rise to it. The fact that a given line is here, and not there, or is dashed, or solid, extends from the contextual intelligence brought by an architect thinking through a particular, contextual, four-dimensional problem.

If you wanted to train an AI to design, you would want to map the decision-making logic that creates design decisions, rather than the detail that expresses the design decision itself. That would in fact be your only measure of success: can the AI reproduce the same design, independently, when exposed to the same input data (e.g. project constraints, clients, locations, other project conditions). If it took all that input data and created wildly different buildings, it isn't designing like you—which defeats the purpose, unless your goal is to be surprised, in which case you could achieve the same result by hiring recent grads with unconventional portfolios and having unlimited free coffee in the kitchen.

To imbue an AI with decision-making logic, you need all the inputs, and an idea of how they relate to each other, and that strongly suggests knowledge graphs.

Visualizing Project Knowledge

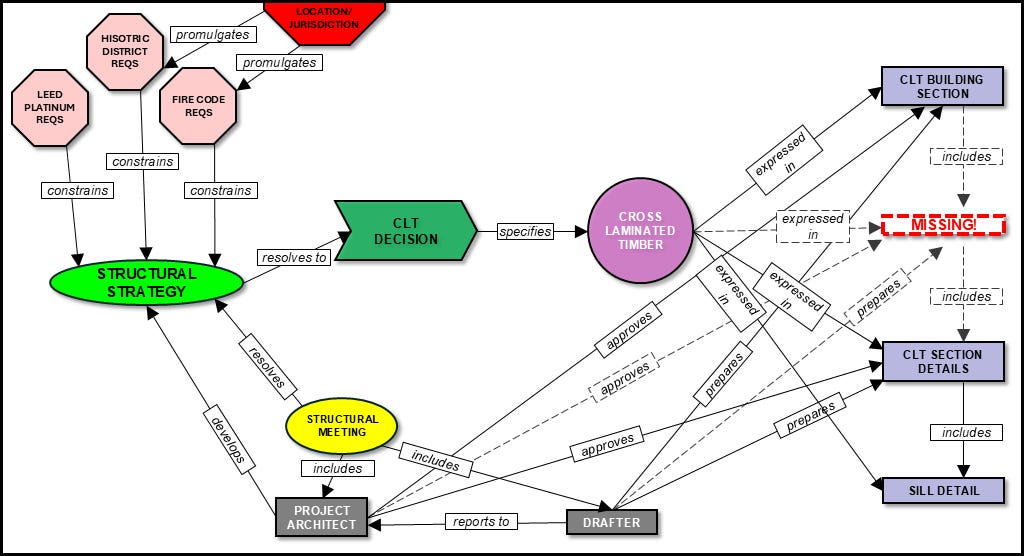

Consider this fictional example of how a firm made a decision on a structural strategy for a boutique hotel in West Oakland:

A wall section drawing (lower right), extracted from the permit set, would only capture the results of the design process. The knowledge graph captures the process that gave rise to the section itself: who was involved, what the constraints were, which institutions or phenomena set the table for those constraints, etc.

An AI, training on such data, now has a roadmap for how to think when it sits down to draw a wall section.
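As a rough sketch of what "capturing the process" could mean in data terms, the snippet below stores a few decisions as (source, relation, target) triples and walks backward from a drawing to everything that influenced it. The node names, relation names, and the `upstream` helper are all illustrative placeholders, not a record of any real project or an established schema.

```python
from collections import defaultdict, deque

# (source, relation, target) triples; all names are illustrative placeholders.
TRIPLES = [
    ("Client", "requires", "LEED certification"),
    ("City of Oakland", "enforces", "Fire code"),
    ("LEED certification", "constrains", "Structural system: CLT"),
    ("Fire code", "constrains", "Structural system: CLT"),
    ("Project architect", "selects", "Structural system: CLT"),
    ("Structural system: CLT", "determines", "Wall section"),
]

def build_incoming(triples):
    """Index edges by target so we can walk from a drawing back to its causes."""
    incoming = defaultdict(list)
    for source, relation, target in triples:
        incoming[target].append((source, relation))
    return incoming

def upstream(node, triples):
    """Return every node that directly or indirectly influences `node`."""
    incoming = build_incoming(triples)
    seen, queue = set(), deque([node])
    while queue:
        current = queue.popleft()
        for source, _relation in incoming[current]:
            if source not in seen:
                seen.add(source)
                queue.append(source)
    return seen

print(sorted(upstream("Wall section", TRIPLES)))
```

The wall section drawing alone would hold none of these upstream nodes. The graph keeps them attached to the result.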

Still, the process of making such a knowledge graph to capture 1000+ projects in a firm's history would seem to run into the same problems as repairing all those old BIM files . . . because of strategic incompleteness, there are still going to be gaps in the knowledge graph, which you will have to identify and repair.

Well, yes and no. Assuming you had a fairly healthy knowledge graph of all the firm’s projects, a SENATOR-like model would identify and repair those gaps, using contextual knowledge. [1]

How Does a Robot Draw a Wall Section?

Let’s zoom in on our West Oakland project and imagine a situation where an old permit set is missing a wall section, unbeknownst to us:

To correct this omission the traditional way would require combing through the entire permit set to even discover the missing section (needle, meet haystack), then correcting not just the section itself but everything it connects to: the BIM model, the details, the specifications, the RFIs that reference it — because inconsistencies between these elements would confuse any machine learning algorithm.

Alternatively, with a SENATOR-type approach (which we'll call ARCHINATOR because it sounds kinda sci-fi and slightly threatening), the process becomes almost elegant:

We know that, historically, CLT was chosen as the structural system. However, it’s not the only structural system that could have worked. A diagram showing alternate possibilities might look like so:

Both steel joist and concrete might have worked as a structural system, based on this particular project’s parameters. But they both seem like unlikely choices. ARCHINATOR can make the same assessment: based on the connective tissue within the knowledge graph, an ARCHINATOR could predict forward (in red) the likelihood that CLT would be chosen as a structural system, given this particular project architect, this particular jurisdiction, this particular client, and so forth. That’s your Monte Carlo Tree Search.

Simultaneously, it can predict backwards, noting that the known details in the existing documentation reflect a CLT system, strengthening the confidence that CLT was indeed the chosen system, like we saw in Scrabble.
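One way to read the forward and backward passes is as a prior and a likelihood. The sketch below combines them with Bayes' rule. This is a deliberate simplification standing in for the search-based approach, not SENATOR's actual algorithm, and every probability is an invented placeholder.

```python
# Forward pass: how likely each system is, given architect, client, jurisdiction.
# All probabilities are made-up placeholders.
prior = {"CLT": 0.6, "steel joist": 0.25, "concrete": 0.15}

# Backward pass: how likely the surviving details are under each system.
likelihood_of_details = {"CLT": 0.9, "steel joist": 0.1, "concrete": 0.05}

def posterior(prior, likelihood):
    """Bayes' rule: P(system | details) is proportional to prior * likelihood."""
    unnormalized = {s: prior[s] * likelihood[s] for s in prior}
    total = sum(unnormalized.values())
    return {s: p / total for s, p in unnormalized.items()}

result = posterior(prior, likelihood_of_details)
for system, p in sorted(result.items(), key=lambda kv: -kv[1]):
    print(f"{system}: {p:.3f}")
```

With these placeholder numbers, the evidence from the existing details pushes confidence in CLT above what the forward prediction alone would give, which is the Scrabble effect in numeric form.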

Having confirmed that CLT was the chosen structural system, ARCHINATOR can finally predict vertically (in cyan), deducing that because there is a building section, and section details, the gap in question should be a wall section.
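The vertical step can be sketched as a simple check against an expected drawing hierarchy: if a coarser level and a finer level both exist, the level between them probably should too. The three-level hierarchy and the `find_vertical_gaps` helper are assumptions made for illustration; a real drawing set would need a much richer model.

```python
# Assumed drawing hierarchy, from coarse to fine; an illustrative convention only.
HIERARCHY = ["building section", "wall section", "section detail"]

def find_vertical_gaps(present, hierarchy=HIERARCHY):
    """Flag levels that are missing even though a coarser and a finer level exist."""
    gaps = []
    for i, level in enumerate(hierarchy):
        if level in present:
            continue
        has_coarser = any(h in present for h in hierarchy[:i])
        has_finer = any(h in present for h in hierarchy[i + 1:])
        if has_coarser and has_finer:
            gaps.append(level)
    return gaps

# Hypothetical inventory of what the old permit set actually contains.
permit_set = {"building section", "section detail", "floor plan"}
print(find_vertical_gaps(permit_set))  # ['wall section']
```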

So now ARCHINATOR knows that it has to specifically generate a wall section, based on a CLT structural system, in compliance with Oakland fire codes, LEED requirements, etc., and, taking additional contextual clues from the building sections and various sectional details, can assemble the missing wall section as synthetic data.

This is, of course, dramatically over-simplified. A proper architectural knowledge graph would include emails, meeting minutes, drawing standards, and all sorts of other things. The more data, and the more kinds of data one includes, the more accurate ARCHINATOR would be in determining where gaps are, and how to fill them. It’s the network effect that ultimately gives prediction power to a tool like SENATOR or ARCHINATOR.

Encoding Architectural Knowledge – A Way Forward

Knowledge graphs excel at relating different types of information and revealing connections between seemingly unrelated entities—showing how a client's offhand comment during an initial meeting might influence a material selection months later, or how a zoning variance affects bathroom layout. They mirror how architects actually think — in networks of interconnected decisions, each influencing and being influenced by others.

Are their benefits worth committing to a wholesale overhaul of how architects organize their data? That sounds like an a$$load of work. But probably less than 87.5 years’ worth ;-) And, unlike the traditional legacy project cleaning approach, this approach would be self-reinforcing, in the sense that the more you do, the easier it is to do the rest.

Building knowledge graphs for just a few years' worth of projects —recent enough to have good documentation but not so many as to be overwhelming—would create a foundation for expanding both forward and backward in time. Starting with basic node types (clients, contracts, jurisdictions, drawings, specifications, personnel) would establish a framework that grows more sophisticated and more powerful with each project added, evolving organically.
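For a sense of how small that starting framework could be, here is a minimal sketch using the six node types named above. The class names, the `relate` and `missing_types` methods, and the sample entries are hypothetical. A production system would more likely sit on a graph database than on in-memory Python objects.

```python
from dataclasses import dataclass, field
from enum import Enum

class NodeType(Enum):
    CLIENT = "client"
    CONTRACT = "contract"
    JURISDICTION = "jurisdiction"
    DRAWING = "drawing"
    SPECIFICATION = "specification"
    PERSONNEL = "personnel"

@dataclass(frozen=True)
class Node:
    node_type: NodeType
    name: str

@dataclass
class ProjectGraph:
    project: str
    edges: list = field(default_factory=list)  # (Node, relation, Node) tuples

    def relate(self, source, relation, target):
        """Record one relationship between two typed nodes."""
        self.edges.append((source, relation, target))

    def missing_types(self):
        """Node types this project has not recorded yet."""
        used = {n.node_type for s, _, t in self.edges for n in (s, t)}
        return set(NodeType) - used

graph = ProjectGraph("Hypothetical hotel")
client = Node(NodeType.CLIENT, "Example client")
drawing = Node(NodeType.DRAWING, "A-501 wall sections")
graph.relate(client, "approved", drawing)
print(sorted(t.value for t in graph.missing_types()))
```

Even a coverage check this crude shows, project by project, which kinds of knowledge have not been captured yet.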

I would start with basic node types (client, contract type, jurisdiction, drawing, specification, personnel, etc.) and approach it like so:

If you’re still unconvinced, I can say this part with certainty: in the age of AI, we're all going to face a broad, binary choice:

We can try to train AI on the things we did before we knew AI was a thing, asking it to understand our roles, our artifacts, our customs, and our procedures, some of which were anachronistic to begin with.

Or we can rearrange our knowledge in a way that's more conducive to AI's learning and working.

An architectural knowledge graph speaks to the latter. SENATOR's ability to crawl through 27 million nodes and 53 million edges happens because the data is organized in a fashion that aligns with how AI thinks. SENATOR's ability to do that, in turn, enables quantum jumps in medical research, patient care, and other beneficial outcomes. What similar advances would be possible in architecture, if we reorganized our knowledge to work with AI rather than expecting AI to adapt to the strategically incomplete ways in which we’ve historically stored that knowledge?

[1] Again, you would still need a human in the loop to review the synthetic data being created, at some level.